Content moderation protects our freedom of expression on the internet from morphing into anything dangerously sinister…or does it? Evan Yoshimoto (MPP 2021) exposes the racial undertones of online content moderation and its role in suppressing minority voices. In our increasingly digital world, legislation must reflect this ugly truth.

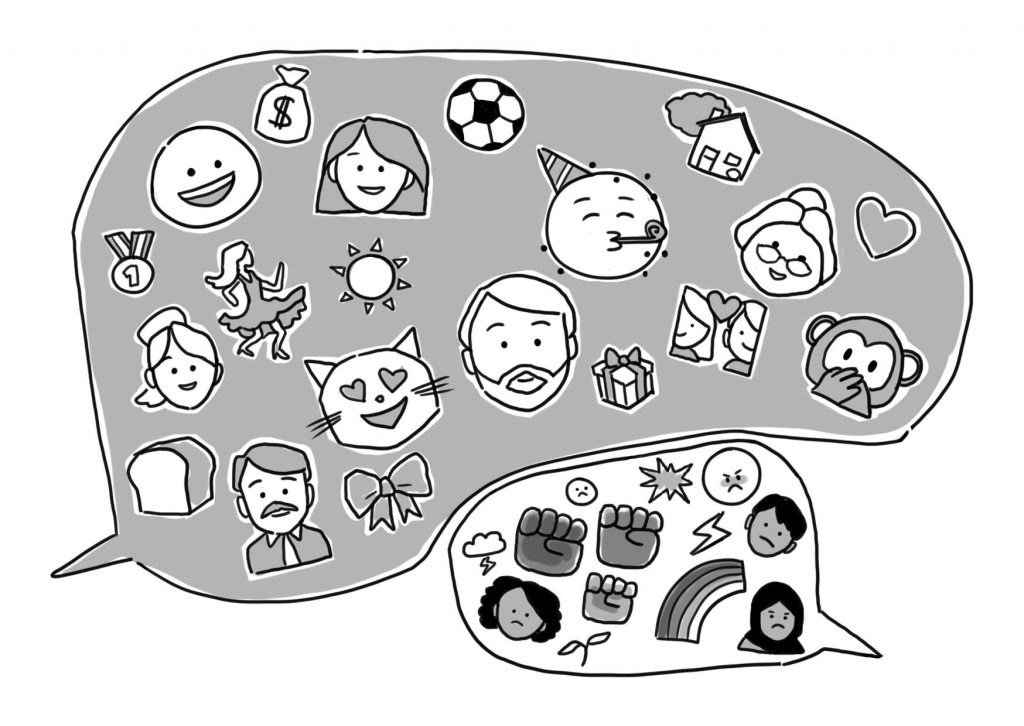

“Mark Zuckerberg hates black people. Facebook’s secret censorship rules protect white men from hate speech but not black children.” – These are the headlines that appear when you search the internet for a more critical lens on how tech giants are regulating hate speech on social media platforms through content moderation. The ugly truth is revealed in these articles: content moderation is ineffective in addressing hate speech when regulation does not consider the social, political, and historical context of the content being reported. When this context is not considered, it can and does perpetuate societal discrimination against those who live on the margins of society and are acting to change their position using social media.

Regulating hate speech through content moderation requires social media platforms such as Facebook, Twitter, and YouTube to review and remove any content that the governing regulation considers to be illegal hate speech. These platforms are required to abide by the content moderation laws of the state or regulatory body in whose jurisdiction they operate. In a globalised social media network where content can be accessed across borders, these companies have enormous influence on “who has the ability to speak and what content can be shared on their platform”. Essentially, they have a monopoly on the debate over what is considered free speech versus hate speech on social media, and this has implications for activists all across the world.

Let us look at Germany’s NetzDG as an example of content moderation enforced by state regulation. Largely considered to be the strictest legislation of its kind, NetzDG addresses various forms of online hate speech in Germany by forcing social media companies with more than two million registered users in Germany “to remove ‘obviously illegal’ posts within 24 hours or risk fines of up to €50 million”. “Obviously illegal” sounds, well, obvious… right?

Unfortunately, because platforms risk heavy fines and have no clear definition of what constitutes hate speech, NetzDG faces accusations of censoring legitimate speech, “including satire and political speech, without any remedy”. From July to December 2018, a total of 79,860 reported posts were reportedly removed as illegal under NetzDG. But after Twitter took down the account of the German satirical magazine Titanic for publishing a series of posts aimed at far-right politics, it is unclear whether context was considered for all of the content removed. Both the Lesbian and Gay Federation in Germany and the Central Council of Jews in Germany are in agreement – this type of regulation is not as effective in removing online hate speech as it could be, especially when it comes to content that deals with social marginalisation.

According to the Association for Progressive Communications, “censorship is increasingly being implemented by private actors, with little transparency or accountability, [which] disproportionately impacts groups and individuals who face discrimination in society, in other words, groups who look to social media platforms to amplify their voices, form associations, and organise for change.” Social media companies and regulators are taking a one-size-fits-all approach when developing hate speech standards for content moderation, and the burden of that approach falls hardest on marginalised communities who are pushing for racial justice.

Algorithms – and the humans who develop them with racial biases and flawed, non-representative data – perpetuate this cycle of marginalisation on social media platforms. On Twitter, tweets created by African Americans are one and a half times more likely to be flagged as hate speech under leading artificial intelligence models. On Facebook, many black activists have their content taken down and accounts blocked when they publicly advocate for better treatment of black people in the United States, a country where anti-black racism is still widely prevalent. Even more troubling, when these activists had their white friends post the exact same content, those posts were allowed to remain online with no penalty. Racial biases and the divorce of context from content are clear determining factors in whether or not content that is reported as hate speech is removed.

To address hate speech more effectively through content moderation, social media companies must create better avenues for user feedback that allow them to understand the contextual elements of the content they are moderating. As a starting point, regulation should be guided by the Santa Clara Principles, which outline minimal levels of transparency and accountability that social media platforms should abide by when moderating content, including the provision of a timely appeal process for users facing any content removal or account suspension. It is imperative that the contextual nuances raised in these user appeals are taken into consideration within the content moderation guidelines that address online hate speech going forward. Until this happens, these regulations will continue to censor and disempower social movements led by marginalised groups who are pushing for fairness, equality, and justice.

Content moderation does not serve users when it leads to political censorship. Facebook has at least acknowledged this “mistake”. Regulators and platforms must make the necessary changes to ensure that marginalised people feel that they, and the change-making content they publish, belong on these digital platforms – because they do.

A version of this article has been published at www.digitalservicesact.eu in the context of the author’s participation in a course-based project on the Digital Services Act at the Hertie School.

Evan Yoshimoto is a first-year MPP student at Hertie. Graduating from UC Berkeley and currently working in data and technology politics, he is interested in bringing more diversity, inclusion, equity, and justice initiatives to the field. His past work experience and research interests include topics of climate justice, sustainable economics, data science, and anti-oppression work more broadly.